The emphasis every anti-money laundering (AML) vendor seems to place on artificial intelligence (AI) has increased the risk of “AI-washing,” where companies exaggerate or misrepresent their technical capabilities – something that is attracting growing scrutiny from regulators. A central concern is the need for explainable AI, which ensures AI-driven decisions are transparent and understandable.

In AML, explainable AI is crucial for understanding why certain alerts are generated, the rationale behind blocking or allowing a transaction, or the factors contributing to identifying a high-risk client. This transparency helps financial institutions (FIs) meet regulatory expectations and build trust by allowing clients, auditors, and regulators to see how and why AI-driven decisions are made, reducing the risk of misrepresentation and improving accountability.

However, our 2025 State of Financial Crime survey revealed a contradiction: while firms expressed confidence in understanding regulatory expectations around AI, 91 percent of respondents were still comfortable trading off explainability for greater automation and efficiency, a position that could conflict with the transparency regulators increasingly expect.

In light of this, compliance leaders need to find ways to ensure their AI tools meet both efficiency goals and regulatory requirements. This article explores:

- What explainable AI (XAI) is and why it matters in financial compliance;

- Regulatory expectations;

- Key benefits of XAI for improving transparency and accountability in AML;

- Challenges and limitations; and

- How ComplyAdvantage approaches explainable AI.

What is explainable AI (XAI)?

Explainable AI (XAI) refers to AI systems that make decisions and articulate the how and why behind those decisions. It is also a subset of responsible AI (RAI). While RAI covers the full spectrum of ethical and trustworthy AI practices, XAI focuses specifically on making AI systems transparent and their decisions understandable. For compliance teams, XAI is essential to ensure AI-driven outcomes are interpretable for stakeholders who need to validate and trust these systems.

To achieve this level of transparency, compliance teams often make use of specific XAI techniques, including:

- Rule-based models: Also known as “white-box machine learning models,” straightforward rule-based models apply predefined rules that make them naturally interpretable. For instance, flagging high-value transactions is a simple rule that compliance professionals can easily audit. However, due to a lack of nuance and flexibility, these models’ false positive rate is estimated at over 98 percent.

- Post-hoc explainability: While white-box models offer more transparency upfront, more complex “black-box models” tend to have higher predictive accuracy. These models often require post-hoc methods to allow compliance staff to trace risk scores back to specific input features like transaction type or customer history.

- Hybrid approaches: Combining rules-based and AI-driven techniques, hybrid models leverage both static rules and adaptable algorithms to improve detection accuracy while maintaining transparency. This balance helps compliance teams capture more nuanced suspicious activity while keeping explanations clear and accessible.
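
The three techniques above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the threshold, the feature names (`amount`, `is_cross_border`, `tx_count_24h`), and the `black_box_score` function are all hypothetical stand-ins, and the post-hoc method shown is simple occlusion (resetting one feature at a time to a baseline value and measuring how far the score drops), which is only one of several attribution techniques.

```python
from typing import Callable, Dict, List

Features = Dict[str, float]

# Hypothetical static rule: flag any transaction at or above this amount.
HIGH_VALUE_THRESHOLD = 10_000.0


def high_value_rule(tx: Features) -> bool:
    """White-box rule: the condition itself is the explanation."""
    return tx["amount"] >= HIGH_VALUE_THRESHOLD


def black_box_score(tx: Features) -> float:
    """Hypothetical stand-in for an opaque model; returns a score in [0, 1]."""
    raw = (
        0.00002 * tx["amount"]
        + 0.2 * tx["is_cross_border"]
        + 0.03 * tx["tx_count_24h"]
    )
    # An interaction term makes the score non-additive, as in many real models.
    if tx["is_cross_border"] and tx["tx_count_24h"] > 5:
        raw += 0.15
    return min(1.0, raw)


def occlusion_attribution(
    model: Callable[[Features], float], tx: Features, baseline: Features
) -> Dict[str, float]:
    """Post-hoc explanation: score drop when one feature is reset to baseline."""
    full_score = model(tx)
    contributions = {}
    for name in tx:
        occluded = dict(tx)
        occluded[name] = baseline[name]
        contributions[name] = full_score - model(occluded)
    return contributions


def hybrid_decision(
    tx: Features, baseline: Features, score_threshold: float = 0.6
) -> dict:
    """Hybrid approach: alert if the rule fires or the model score is high."""
    reasons: List[str] = []
    if high_value_rule(tx):
        reasons.append(f"rule: amount >= {HIGH_VALUE_THRESHOLD:,.0f}")
    score = black_box_score(tx)
    if score >= score_threshold:
        attributions = occlusion_attribution(black_box_score, tx, baseline)
        top = max(attributions, key=attributions.get)
        reasons.append(f"model: score {score:.2f}, largest driver '{top}'")
    return {"alert": bool(reasons), "score": score, "reasons": reasons}


# Illustrative values only; the baseline represents a "typical" transaction.
example_tx = {"amount": 12_000.0, "is_cross_border": 1.0, "tx_count_24h": 6.0}
typical_tx = {"amount": 100.0, "is_cross_border": 0.0, "tx_count_24h": 1.0}
for reason in hybrid_decision(example_tx, typical_tx)["reasons"]:
    print(reason)
```

The rule contributes a reason that needs no further interpretation, while the model's reason is reconstructed after the fact. That difference is the practical distinction between white-box and post-hoc explainability.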

How can XAI improve AML systems?

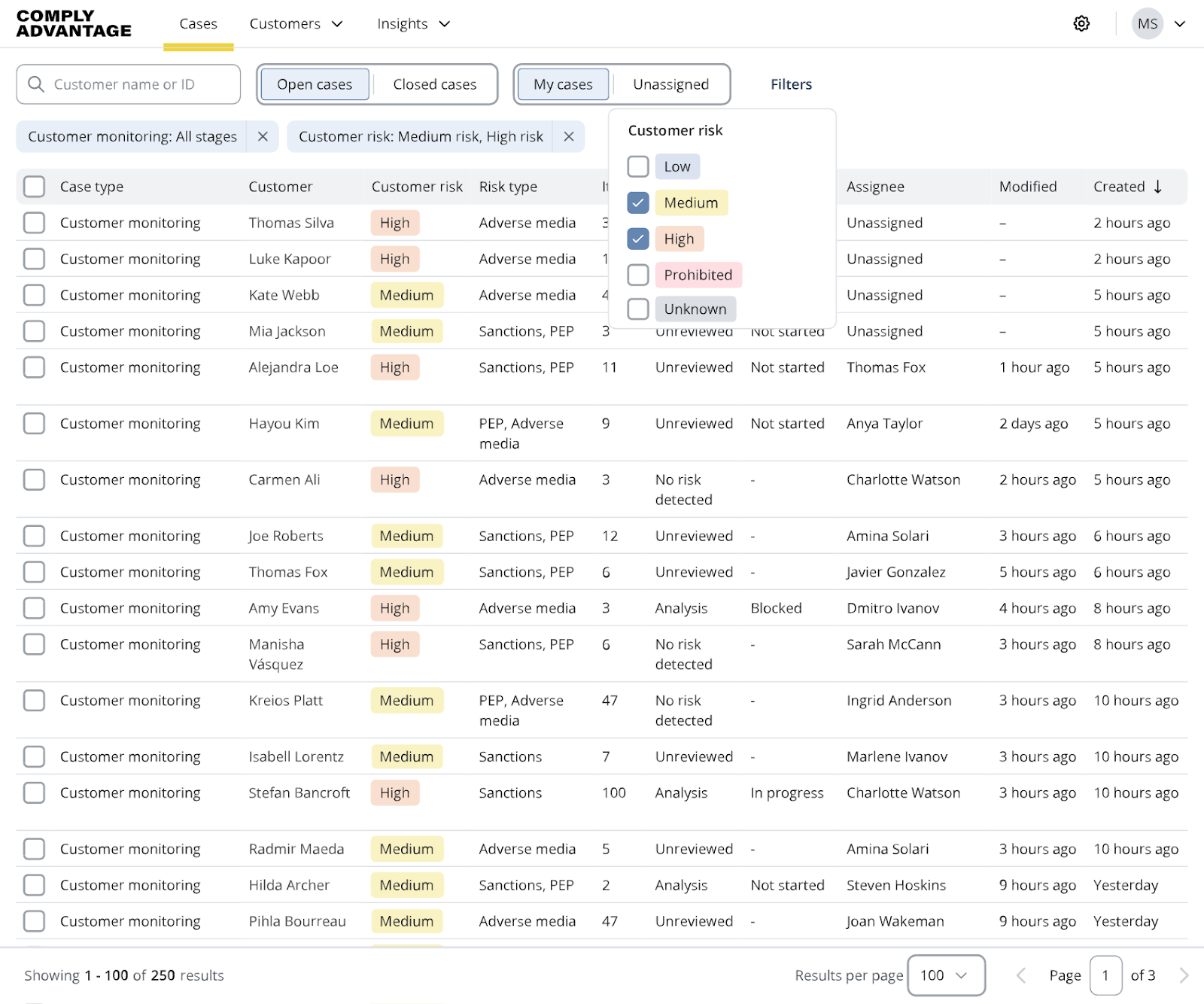

One of XAI’s primary benefits is its ability to improve alert quality and reduce false positives. Traditional AML models often generate large volumes of alerts, many of which are benign, overloading compliance teams and diverting resources from genuine risks. XAI provides more nuanced insights into each alert’s underlying factors, allowing FIs to refine their detection models and better prioritize cases that warrant deeper investigation.
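
To make the false-positive point concrete, the arithmetic can be sketched as follows. The alert data here is entirely synthetic and the 0.6 cut-off is an arbitrary assumption; the sketch only shows how the share of benign alerts changes once a team can prioritize on a score it understands.

```python
from typing import List, Tuple

# Synthetic alerts: (risk score, whether an investigator confirmed the alert).
Alert = Tuple[float, bool]

ALERTS: List[Alert] = [
    (0.95, True), (0.91, False), (0.62, True), (0.40, False), (0.38, False),
    (0.35, False), (0.22, False), (0.20, False), (0.15, False), (0.10, False),
]


def false_positive_share(alerts: List[Alert]) -> float:
    """Fraction of alerts that turned out to be benign."""
    if not alerts:
        return 0.0
    benign = sum(1 for _, confirmed in alerts if not confirmed)
    return benign / len(alerts)


def prioritized(alerts: List[Alert], min_score: float) -> List[Alert]:
    """Keep only alerts at or above the chosen score cut-off."""
    return [alert for alert in alerts if alert[0] >= min_score]


def missed_confirmed(alerts: List[Alert], min_score: float) -> int:
    """Confirmed cases that would fall below the cut-off."""
    return sum(1 for score, confirmed in alerts if confirmed and score < min_score)


print(f"all alerts: {false_positive_share(ALERTS):.0%} benign")
print(f"score >= 0.6: {false_positive_share(prioritized(ALERTS, 0.6)):.0%} benign")
print(f"confirmed cases below cut-off: {missed_confirmed(ALERTS, 0.6)}")
```

Checking `missed_confirmed` alongside the benign share matters: a cut-off that lowers false positives but hides genuine cases would not be an improvement.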

XAI also significantly strengthens a firm’s ability to audit and justify decisions. For example, if a model identifies a suspicious transaction, explainable AI can articulate the factors driving the alert – such as transaction patterns or the customer’s historical risk profile. This detailed reasoning behind each alert enhances the institution’s readiness for regulatory scrutiny, as it enables compliance teams to confidently demonstrate why an alert was triggered and how their AML systems mitigate the risk of oversight or error.

One of our most practical uses of XAI is through our Advanced Fraud Detectors. Designed with client-facing transparency, the system goes beyond identifying suspicious behavior; it provides clear explanations for each alert, detailing the factors influencing the decision. This not only helps our clients justify actions but also enhances readiness for audits, as they can transparently address regulatory inquiries about specific alerts.

Iain Armstrong, Regulatory Affairs Practice Lead at ComplyAdvantage

Moreover, explainability in AI models can help train and upskill compliance teams. When compliance staff understand the logic behind alerts, they can better analyze patterns and develop a deeper understanding of high-risk indicators. This helps build expertise across the team, enabling a more informed approach to AML beyond simple model outputs.

However, XAI doesn’t just enhance compliance and regulatory functions – it plays a crucial role in building better products.

The relationship between XAI and product excellence is mutually reinforcing, and it’s difficult to achieve a great product without focusing on these elements. At ComplyAdvantage, our model risk management approach not only helps us control risk and deliver XAI to clients but also plays a key role in building high-quality AI systems that underpin best-in-class products and services.

Chris Elliot, Director of Data Governance at ComplyAdvantage

Regulatory expectations surrounding XAI

The regulatory landscape surrounding AI and machine learning is continually evolving, which can create uncertainty for organizations seeking to adopt XAI. As AI technologies become more integral to AML and broader compliance practices, regulators worldwide are sharpening their focus on transparency, fairness, and accountability in AI-driven systems. FIs adopting these technologies must stay attuned to emerging legal standards and best practices, which increasingly emphasize XAI.

While jurisdictions like the European Union, United Kingdom, and United States have different regulatory frameworks, they share a common concern: ensuring AI applications are transparent and fair, especially in high-stakes sectors like AML.

European Union

Considered “the world’s first comprehensive AI law,” the EU AI Act establishes expectations for transparency, governance, and accountability in AI-powered AML solutions. Having entered into force in August 2024, the Act requires FIs to ensure transparency in their decision-making processes, including clarifying why certain transactions are flagged as suspicious. Additionally, the Act emphasizes the importance of robust data governance frameworks that mandate high-quality data to minimize inaccuracies and bias.

The EU’s General Data Protection Regulation (GDPR) complements these AI-specific standards by enforcing stringent data privacy controls that intersect with XAI goals. GDPR’s “right to explanation” gives individuals insight into automated decisions that affect them, a standard that dovetails with the transparency requirements of the EU AI Act.

United Kingdom

In the UK, the regulatory approach to AI in financial services has been shaped by institutions like the Financial Conduct Authority (FCA) and the Bank of England, which have jointly addressed the need for responsible AI. While the UK has not yet enacted an AI law on par with the EU’s AI Act (as of November 2024), these regulators have issued guidelines highlighting the importance of explainability, particularly for high-risk applications like AML.

The FCA has emphasized in its guidance that AI and machine learning applications must be interpretable, especially when they affect consumer rights or regulatory compliance. The FCA and Bank of England’s Machine Learning in UK Financial Services report underscores that FIs should implement governance frameworks that oversee AI models, including transparency around decision-making processes. They recommend that larger institutions lead by example in adopting advanced analytics, recognizing that smaller firms may face challenges in implementing these standards due to resource constraints.

Moreover, the UK’s proposed AI regulatory framework, introduced in the government’s National AI Strategy, encourages a “pro-innovation” approach that balances AI’s potential with ethical considerations like fairness and transparency. To do this effectively, the UK government has indicated plans to avoid “over-regulation” but will likely monitor AI developments and may impose stricter controls over time.

United States

No federal law specifically regulates AI in financial services in the United States. However, several federal and state agencies, including the Federal Reserve, the Office of the Comptroller of the Currency (OCC), and the New York State Department of Financial Services (NYDFS), have issued guidance and policy statements that touch on AI transparency and fairness in FIs.

In 2024, the US Treasury Department also introduced a National Strategy for Combating Terrorist and Other Illicit Financing, highlighting AI technologies’ transformative potential in enhancing AML compliance. This strategy underscores how AI can analyze vast amounts of data to uncover patterns related to illicit financing. Additionally, the Treasury issued a Request for Information (RFI) in June 2024, aimed at gathering insights on the use of AI in the financial sector, particularly concerning compliance with AML regulations. The RFI focuses on the opportunities AI presents and the associated risks, such as bias and data privacy concerns. This proactive stance indicates a commitment to shaping future regulations and guidance that prioritize transparency and fairness in AI applications within financial services.

Benefits of using explainable AI for AML

As FIs look to strengthen their AML capabilities, XAI presents a range of unique benefits that enhance operational efficiency and regulatory compliance, including:

- Reduced operational burden: By improving the quality of alerts and decreasing false positives, XAI helps compliance teams focus their resources on genuine risks rather than sifting through a high volume of alerts that require minimal action. This streamlining can lead to more efficient allocation of human resources.

- Stakeholder engagement: XAI can enhance communication with external stakeholders, such as regulators and clients. Clear explanations of the rationale behind decisions allow FIs to engage more effectively in discussions around compliance practices and risk management strategies.

- Support for continuous improvement: The insights gained from XAI can inform ongoing enhancements to AML strategies. By analyzing the reasons behind alerts and outcomes, organizations can refine their detection models and policies, fostering a culture of continuous improvement in risk management.

- Boosted client trust: FIs can foster greater trust in their AML practices by utilizing XAI. Transparency in decision-making reassures clients that their FI is diligent and fair in its monitoring processes.

Challenges and limitations of XAI

While XAI offers numerous benefits, its implementation is not without challenges. Organizations must navigate a complex landscape of technical, regulatory, and operational hurdles to integrate XAI into their compliance frameworks effectively. Understanding these limitations is essential for FIs as they seek to balance transparency with efficiency and effectiveness. Some primary challenges include:

- Data privacy: Ensuring privacy while maintaining transparency can be a delicate balance. For instance, GDPR regulations in Europe mandate strict data protection measures. Organizations must navigate these regulations while providing sufficient transparency in their XAI outputs, which can be challenging when personal data is involved.

- Bias: If the data used to train XAI models is biased or unrepresentative, the explanations generated may perpetuate existing biases, leading to inaccurate or unfair outcomes. In January 2024, the New York Department of Financial Services (NYDFS) raised concerns about algorithmic bias in financial technologies, emphasizing the need for fairness and accuracy in automated decision-making processes.

- Dependence on quality data: XAI’s effectiveness relies heavily on the quality and accuracy of the data it processes. Poor-quality data can lead to misleading explanations and decisions, undermining the very purpose of using XAI. Organizations need to invest in data governance and management practices to ensure their systems function correctly.

How does XAI work in an AML system?

In AML, explainability can be integrated in multiple ways to create a transparent and auditable system, including:

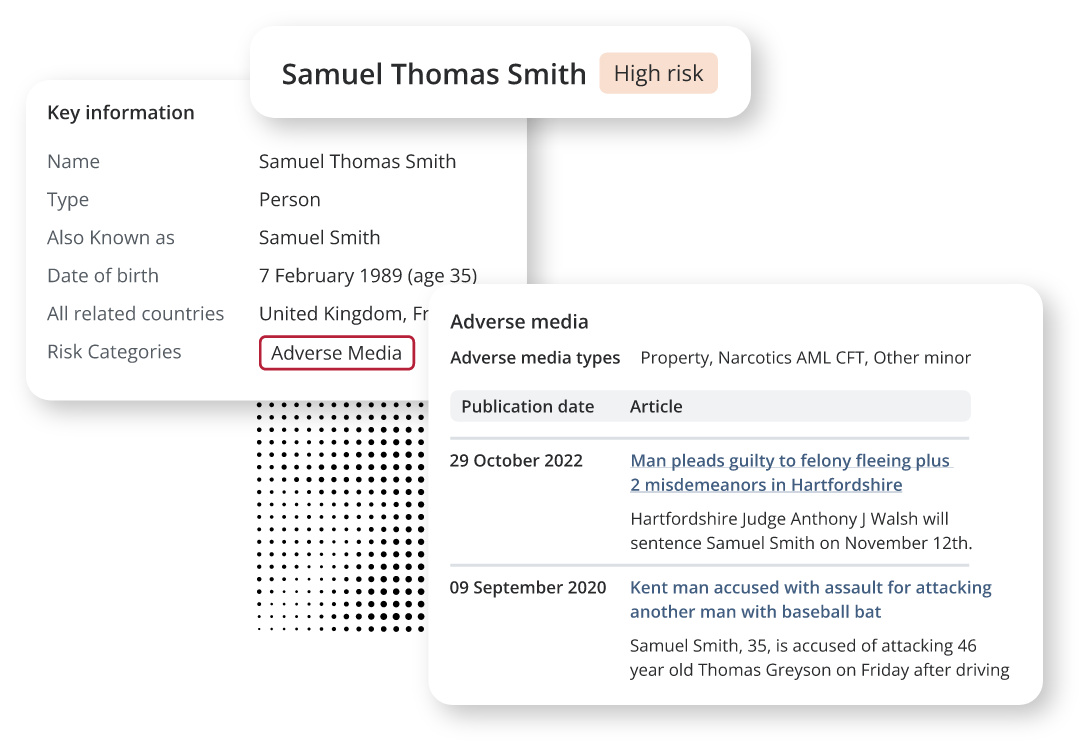

- Data ingestion and entity resolution: By consolidating information from multiple sources, AML systems can provide a comprehensive view of entities. XAI can play a critical role here, clarifying how entities are identified and linked to relevant data points, such as transactions, relationships, or risk indicators. For example, suppose an individual is flagged as high-risk due to adverse media. In that case, XAI systems can clearly break down these triggers, helping compliance teams trace data origins and make informed decisions.

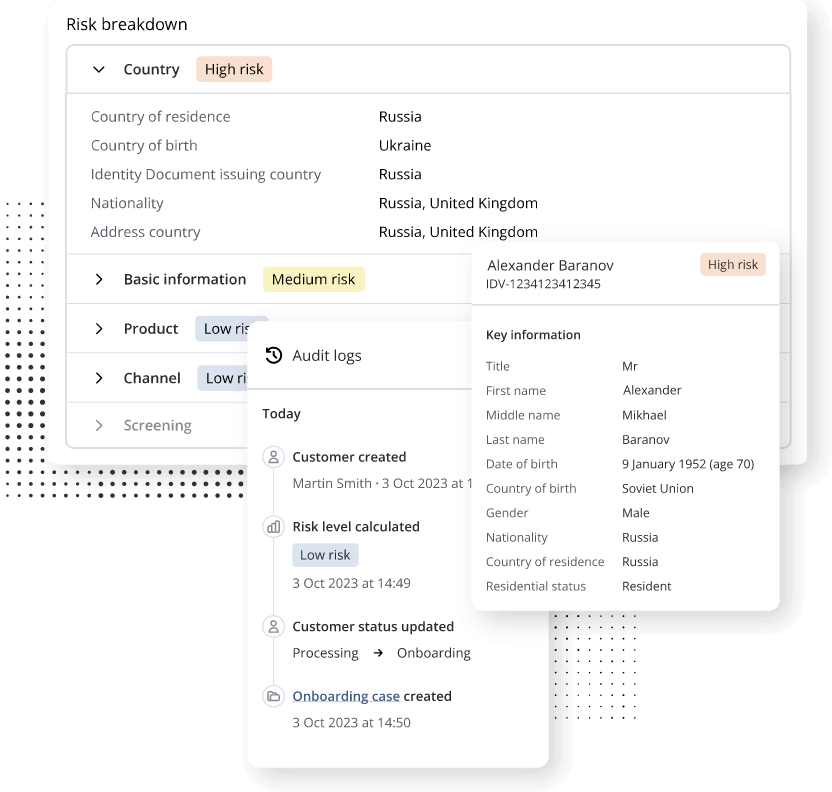

- Risk assessment and scoring: Risk assessments are a critical component of every AML program, involving the evaluation of data to determine the potential risk associated with specific entities or transactions. XAI facilitates this process by using explainable models that allow users to trace the factors influencing risk scores. As shown in the accompanying image, these models break down how specific attributes contribute to an overall risk assessment. This level of detail is vital for compliance officers, as it empowers them to make decisions based on transparent criteria.

- Alert generation with explainability: When an AML system generates alerts for potentially suspicious activities, XAI plays a crucial role in ensuring these alerts are accurate and accompanied by clear explanations. Each alert can include details about the specific risk indicators that triggered the alert, providing compliance teams with context and facilitating efficient follow-up actions. This transparency is essential for regulatory reporting and justifying decisions made in response to alerts.
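
As a rough sketch of how these stages fit together, the example below scores an entity from a list of risk factors, keeps the data source attached to each factor, and produces an alert whose reasons are the score breakdown itself. The factor names, point weights, sources, and the 50-point threshold are illustrative assumptions, and the additive scoring scheme is chosen for readability rather than taken from any specific product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RiskFactor:
    name: str      # e.g. "adverse_media"
    weight: float  # hypothetical points this factor adds to the score
    source: str    # where the underlying data came from (provenance)
    detail: str    # human-readable description of the trigger


@dataclass
class Alert:
    entity_id: str
    score: float
    reasons: List[str]


def score_entity(factors: List[RiskFactor]) -> float:
    """Additive score: the total is the sum of the visible parts."""
    return sum(f.weight for f in factors)


def breakdown(factors: List[RiskFactor]) -> List[str]:
    """List each factor's share of the total, largest contributor first."""
    total = score_entity(factors)
    ordered = sorted(factors, key=lambda f: f.weight, reverse=True)
    lines = []
    for f in ordered:
        share = f.weight / total if total else 0.0
        lines.append(
            f"{f.name}: {f.weight:.0f} pts ({share:.0%}) - "
            f"{f.detail} [source: {f.source}]"
        )
    return lines


def generate_alert(
    entity_id: str, factors: List[RiskFactor], threshold: float = 50.0
) -> Optional[Alert]:
    """Raise an alert only above the threshold, with its explanation attached."""
    score = score_entity(factors)
    if score < threshold:
        return None
    return Alert(entity_id=entity_id, score=score, reasons=breakdown(factors))


example_factors = [
    RiskFactor("high_risk_jurisdiction", 20, "onboarding record", "registered address"),
    RiskFactor("adverse_media", 40, "news screening", "named in a fraud report"),
    RiskFactor("pep_link", 10, "relationship data", "associate of a PEP"),
]
alert = generate_alert("entity-001", example_factors)
if alert is not None:
    for line in alert.reasons:
        print(line)
```

Because each reason carries its source, a reviewer can trace an alert back to the data that produced it without re-running the model.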

Tips on how to tell if an AML vendor uses XAI

By asking the right questions, organizations can better assess the transparency and accountability of the AI solutions they are scrutinizing. The following questions, in particular, can serve as a good starting point for this discussion:

- What methodologies does your AI system employ for decision-making?

Understanding the underlying algorithms and models the vendor uses can provide insight into whether their AI is explainable. Ask if they utilize rule-based systems, interpretable models, or hybrid approaches that combine multiple techniques. A commitment to transparency in methodology is a good indicator of XAI practices.

- How do you provide explanations for risk assessments and alerts?

Vendors should be able to articulate how their system generates explanations for risk scores and alerts. Ask for examples of how these explanations are presented to compliance teams, including the specific factors considered in the decision-making process.

- Can your system demonstrate audit trails for decisions made by the AI?

A robust XAI system should offer traceability for its decisions, allowing compliance professionals to review the rationale behind alerts and risk assessments. Inquire whether the vendor maintains detailed logs of the decision-making process, which can be critical during regulatory audits and compliance reviews.

- What measures are in place to ensure the fairness and accuracy of your AI models?

Understanding how vendors address bias and accuracy in their AI systems is crucial. Ask about the data sources used for training their models, how they ensure data quality, and the steps taken to mitigate bias in decision-making. This is especially relevant in light of regulatory expectations for fairness in automated systems.

- How do you handle model updates and validation?

Continuous monitoring and validation of AI models are essential to maintain their accuracy and reliability over time. Compliance professionals should ask how the vendor ensures their models are regularly tested and updated and whether they explain model performance changes.
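
When discussing audit trails and model updates with a vendor, it can help to have a concrete picture of what a traceable decision record might contain. The sketch below is one possible shape, not a description of any vendor's system: the field names are hypothetical, and the hash chaining (each entry stores the hash of the previous one, using Python's standard `hashlib` and `json` modules) is an assumed design choice for making later edits detectable.

```python
import hashlib
import json
from typing import Any, Dict, List


def _digest(record: Dict[str, Any]) -> str:
    """Stable SHA-256 hash of a record's JSON form."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditTrail:
    """Append-only log of AI decisions, chained so tampering is detectable."""

    def __init__(self) -> None:
        self._entries: List[Dict[str, Any]] = []

    def record(
        self,
        alert_id: str,
        model_version: str,
        inputs: Dict[str, Any],
        score: float,
        reasons: List[str],
        timestamp: str,
    ) -> Dict[str, Any]:
        prev_hash = self._entries[-1]["hash"] if self._entries else ""
        body = {
            "alert_id": alert_id,
            "model_version": model_version,  # ties the decision to a model release
            "inputs": inputs,
            "score": score,
            "reasons": reasons,
            "timestamp": timestamp,
            "prev_hash": prev_hash,
        }
        entry = dict(body, hash=_digest(body))
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev_hash = ""
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record(
    "alert-1", "model-v1", {"amount": 12000}, 0.77,
    ["largest driver: is_cross_border"], "2025-01-01T00:00:00Z",
)
print(trail.verify())
```

Recording the model version with every decision is what lets a team explain why the same transaction might have scored differently before and after a model update.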

How does ComplyAdvantage approach explainable AI?

At ComplyAdvantage, our approach to responsible AI, and by extension XAI, is based on and aligned with the AI Principles of the Organisation for Economic Co-operation and Development (OECD) and the UK’s “A pro-innovation approach to AI regulation: government response” white paper. Of the five core themes that lie at the heart of our understanding and implementation of responsible AI, two relate explicitly to XAI:

- Transparency and explainability: This means we record and make information available about our use of AI systems, including purpose and methodologies.

- Safety, security, and robustness: This means decisions made by our AI systems are explainable, and explanations can be accessed and understood by relevant stakeholders (e.g., users or regulators).

This approach underpins the development of solutions that not only meet regulatory expectations but also deliver meaningful insights.

ComplyAdvantage believes that responsibly developing and managing AI is not only the right thing to do but also leads to better products that engage AI. Responsible AI is best when viewed as part of a best practice and thereby improves outcomes for our clients and their customers. In this way, it is aligned with business needs and not an external force acting on existing processes and competing with priorities.

Chris Elliot, Director of Data Governance at ComplyAdvantage

For more information on ComplyAdvantage’s approach to model risk management, read the full statement here.

Get a 360-degree view of financial crime risk with ComplyAdvantage Mesh

A cloud-based compliance platform, ComplyAdvantage Mesh combines industry-leading AML risk intelligence with actionable risk signals to screen customers and monitor their behavior in near real time.


Originally published 25 November 2024, updated 11 February 2025